Week 08: Synthetic Media

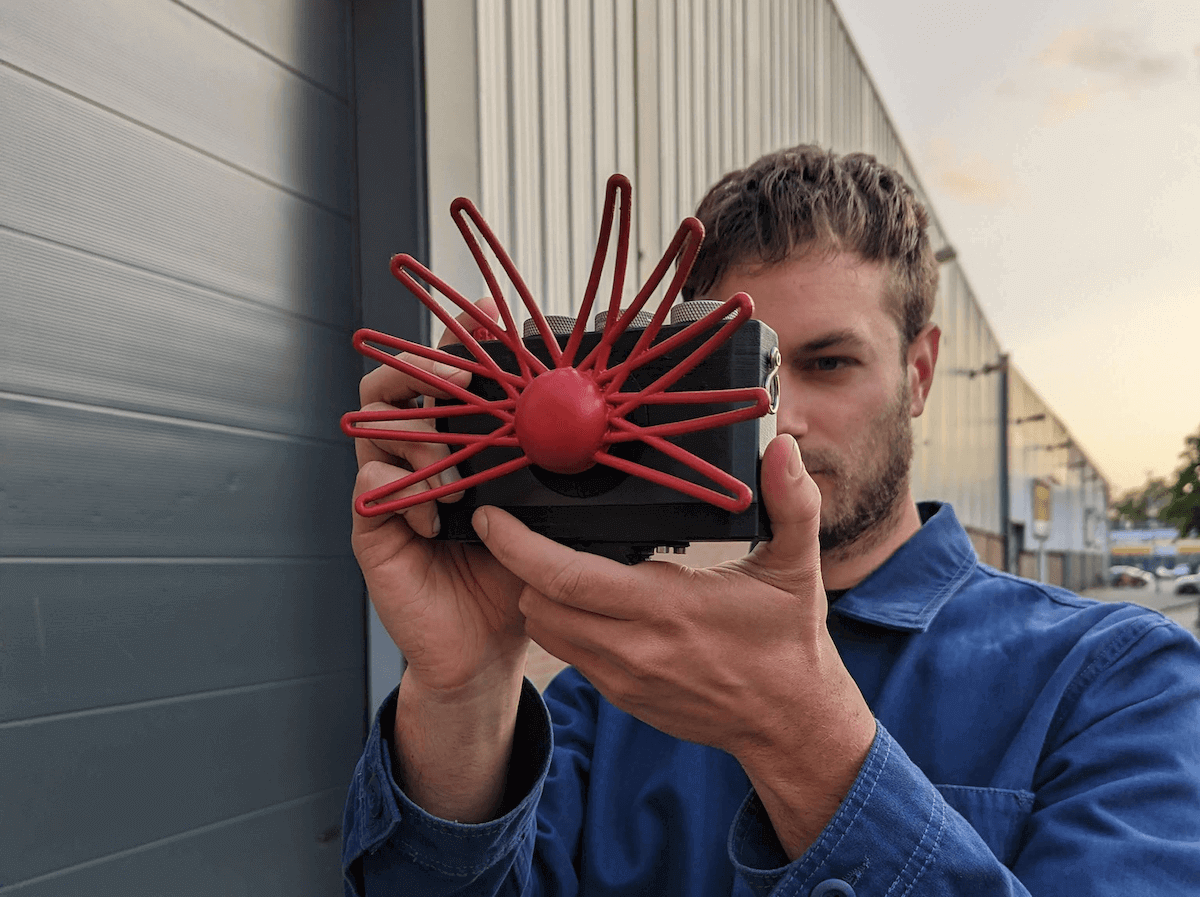

As an example of synthetic media, I looked into the work of artist Bjørn Karmann, specifically his "Paragraphica" camera. Rather than taking photos through a lens, this camera uses the geolocation data captured when the shutter is pressed to generate an image with a text-to-image AI model.

Why do you consider this synthetic media: This piece works with synthetic media because its images are not captured but generated by a text-to-image AI model.

What can you find about how this was made: To generate its images, this work uses the Stable Diffusion API. Stable Diffusion was trained on images from the LAION-5B dataset, which was built from web-crawl data by pairing the URL of each image found online with the value of its alt-text attribute. That is how the dataset gets a description for every image: alt text is written so that visually impaired people (for example, through a screen reader) can understand what an image portrays.
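To make the alt-text idea concrete, here is a minimal Python sketch that pulls (image URL, alt text) pairs out of a single HTML page using the standard library's `html.parser`. It only illustrates the principle: the class and function names are hypothetical, and LAION's real pipeline worked from Common Crawl data at a vastly larger scale and filtered the pairs afterwards.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class AltTextCollector(HTMLParser):
    """Hypothetical helper: collects (image URL, alt text) pairs from <img> tags."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.pairs = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        src = attributes.get("src")
        # An attribute written without a value comes back as None.
        alt = (attributes.get("alt") or "").strip()
        # Skip images with no source or no descriptive alt text:
        # without a caption there is nothing to pair the image with.
        if src and alt:
            # Resolve relative paths like "/photos/a.jpg" against the page URL.
            self.pairs.append((urljoin(self.base_url, src), alt))


def extract_image_text_pairs(html, base_url):
    """Return every (absolute image URL, alt text) pair found in the page."""
    parser = AltTextCollector(base_url)
    parser.feed(html)
    parser.close()
    return parser.pairs


if __name__ == "__main__":
    page = """
    <html><body>
      <img src="/photos/bridge.jpg" alt="The Brooklyn Bridge at sunset">
      <img src="/icons/spacer.gif" alt="">
      <img src="https://cdn.example.com/dog.png" alt="A dog catching a frisbee">
    </body></html>
    """
    for url, caption in extract_image_text_pairs(page, "https://example.com/"):
        print(url, "->", caption)
```

Decorative images such as spacers usually have an empty `alt`, which is why the sketch drops them: they carry no description for a model to learn from.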

What are the ethical ramifications of this specific example: I don't believe this piece raises any particular ethical issues of its own, apart from those inherited from the Stable Diffusion model, such as the lack of consent from the owners of the training images. With regard to what it communicates, it is interesting to think that the camera will only produce a picture close to the actual location if images of that location exist online. Because roughly half of the internet's content is in English, it is not unrealistic to think that places where English is not spoken (or where internet access is less widespread) will be poorly represented in the images this camera generates. In a way, it is a window into the "eyes" of the web.

Week 03

This week we focused on making a short stop-motion animation in GIF format. Working with Audrey, we decided to make the animation with our own bodies instead of puppets or props.

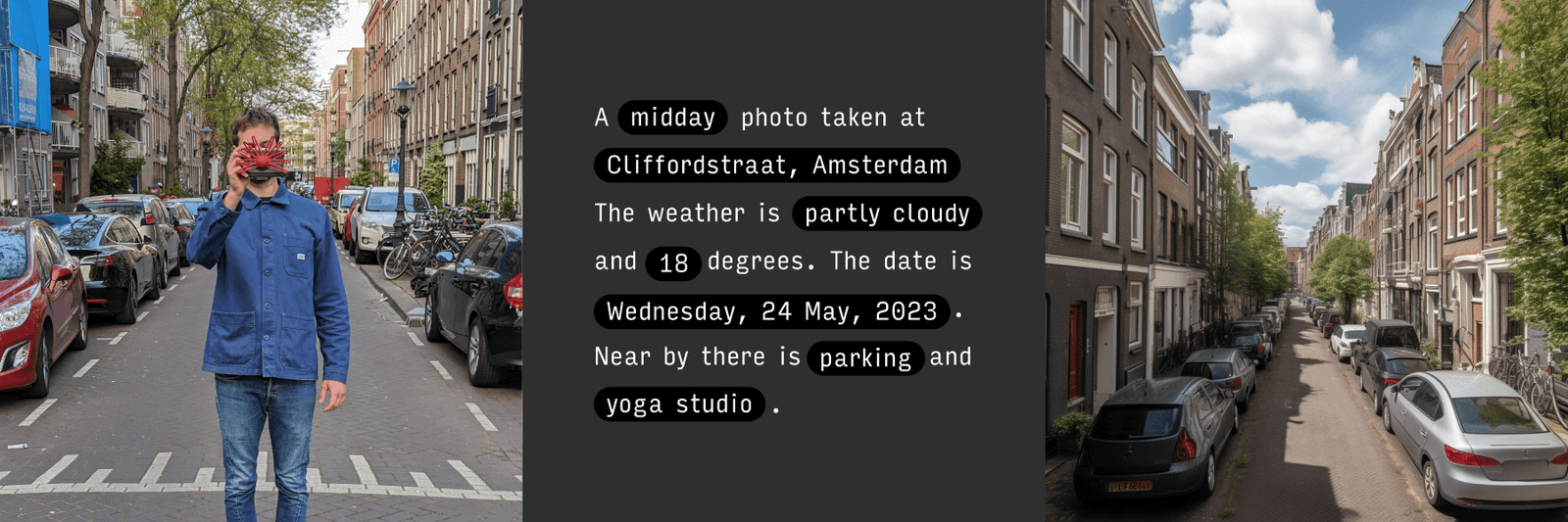

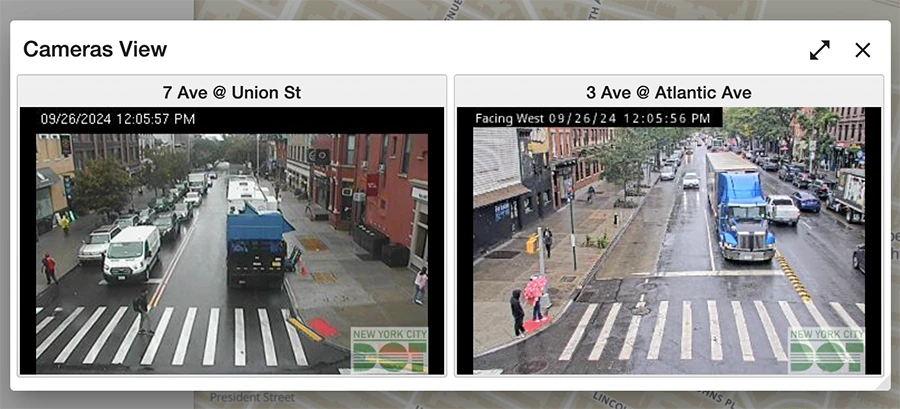

I remembered Morry Kolman's Traffic Cam Photo Booth, which shows a real-time feed from NYC traffic cameras and lets you snap pictures from them with your phone. We then decided to make our stop-motion animation using those cameras instead of our own.

We switched to the official NYC DOT website because its interface was easier to record. Then we chose two cameras in different parts of Brooklyn that had the same perspective.

We planned a simple animation that would connect these two places as if they were side by side, then went to each location to record.

Once we finished recording, we headed back to campus and edited the frames, aligning the two takes on top of the original NYC DOT website interface to make it look as if it were happening in real time.

Week 02

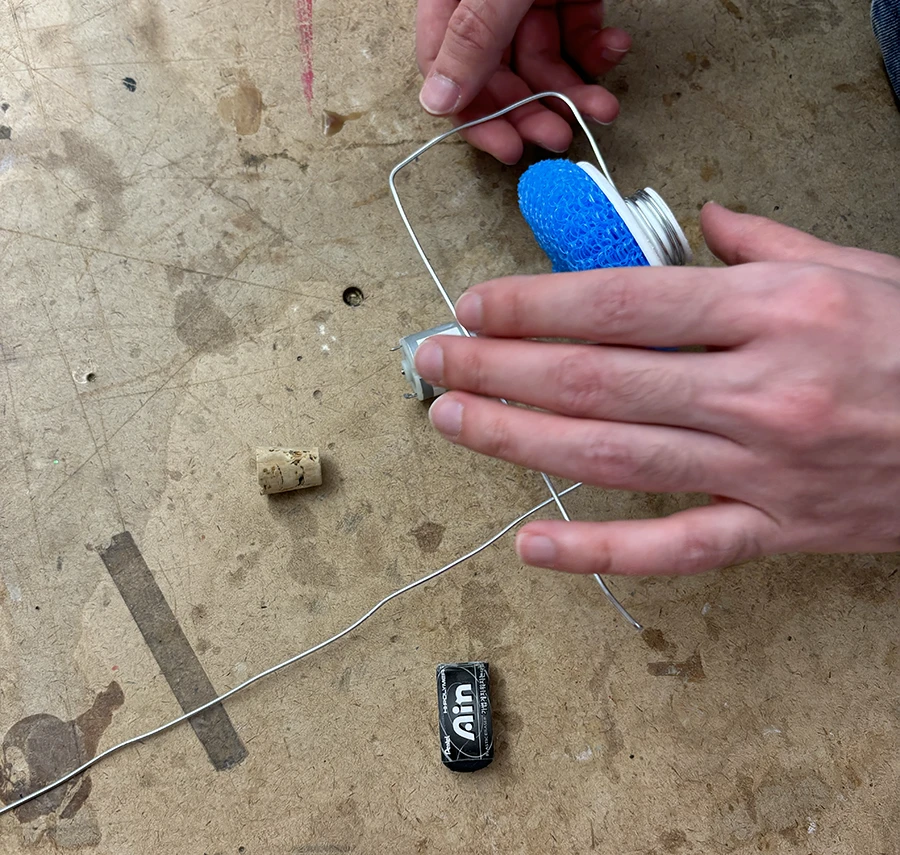

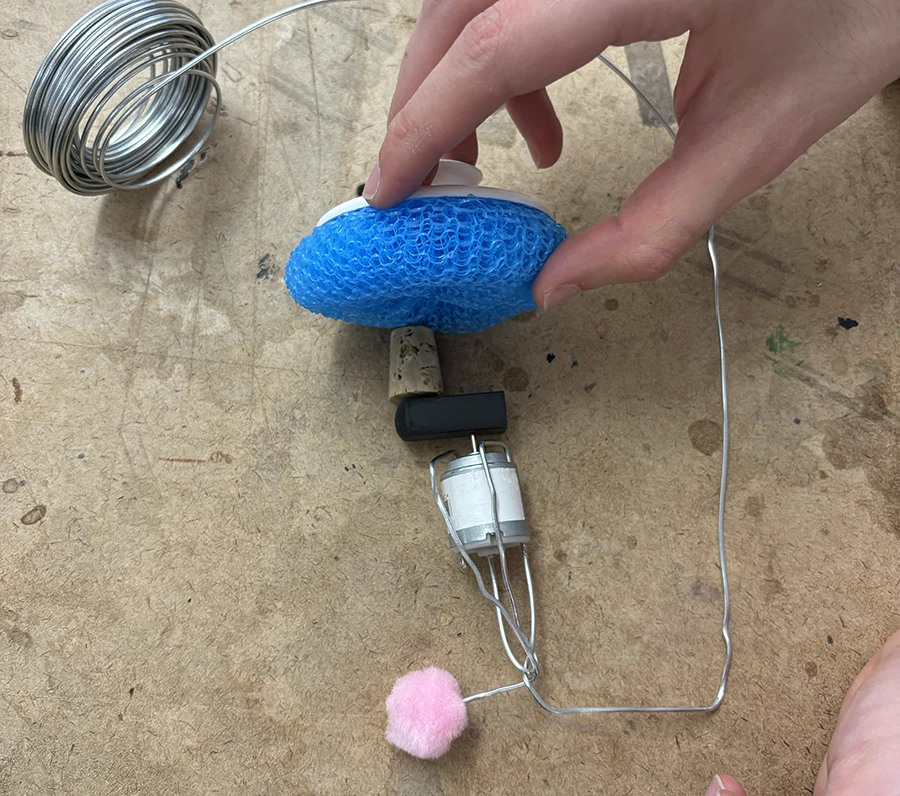

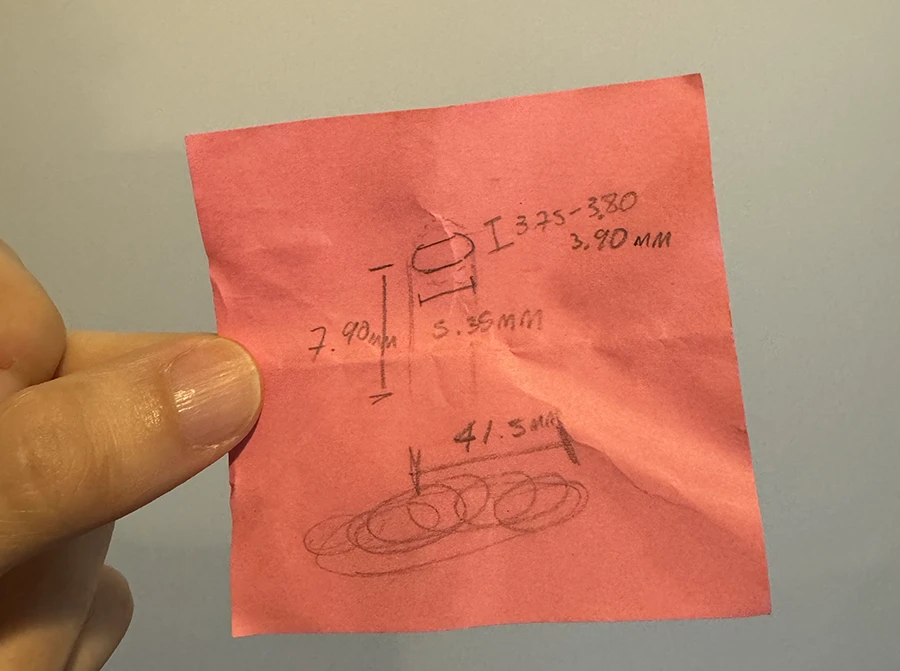

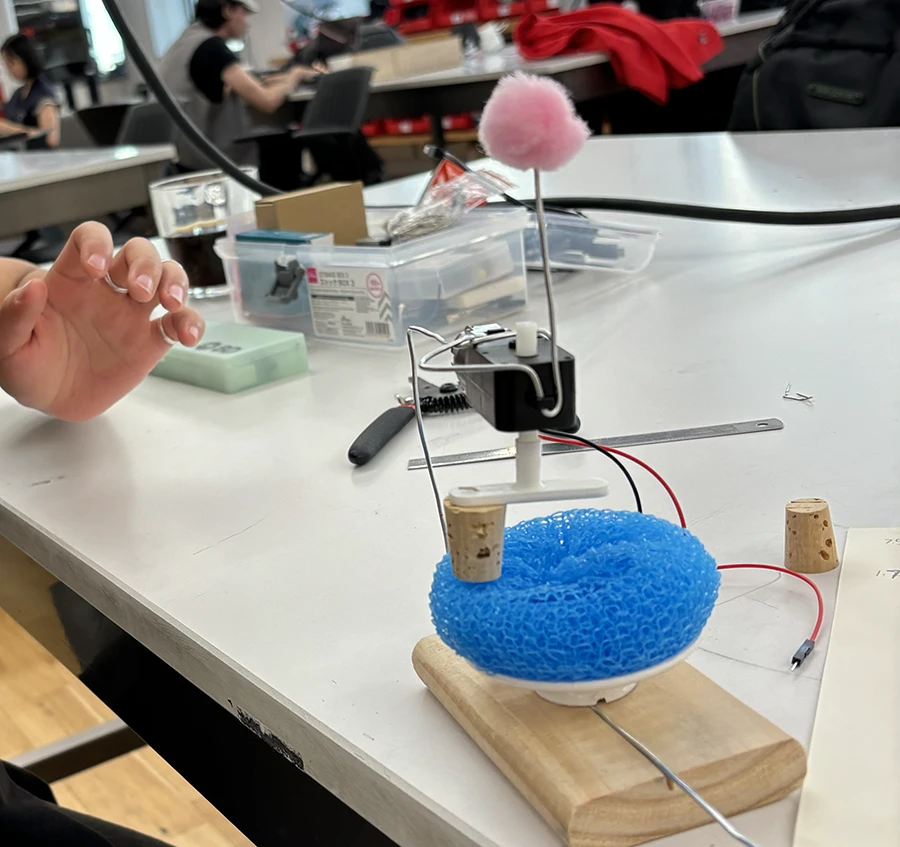

Our second-week assignment was to build a physical installation that mimics a sound of our choosing. We started with a trip to the hardware store to test the sounds of different objects.

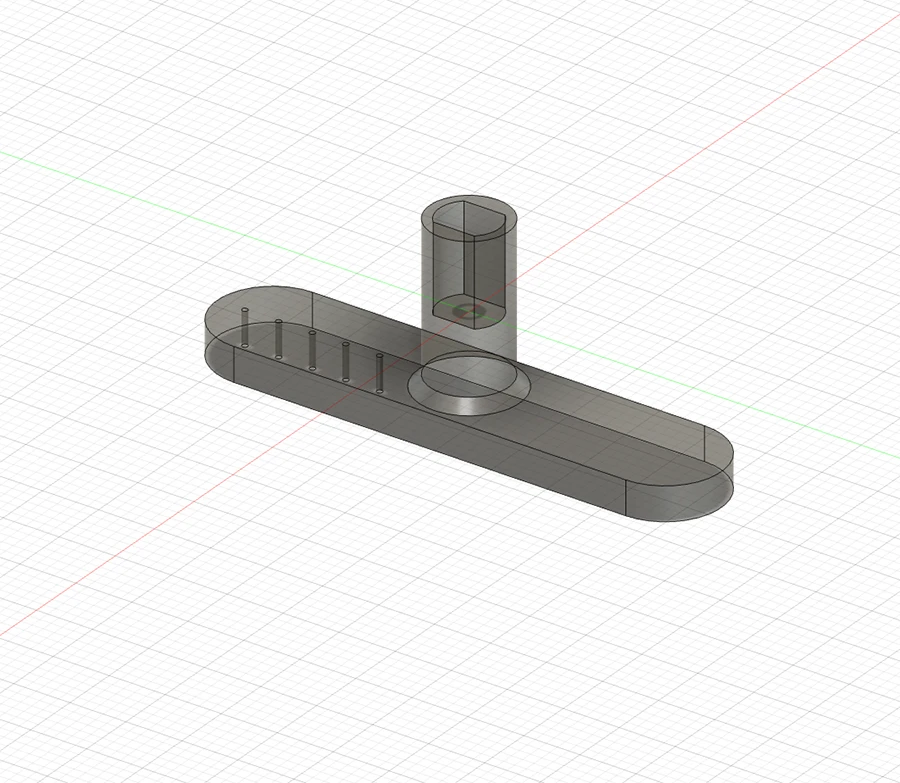

We then attempted to design and build a structure to reproduce this sound on its own.

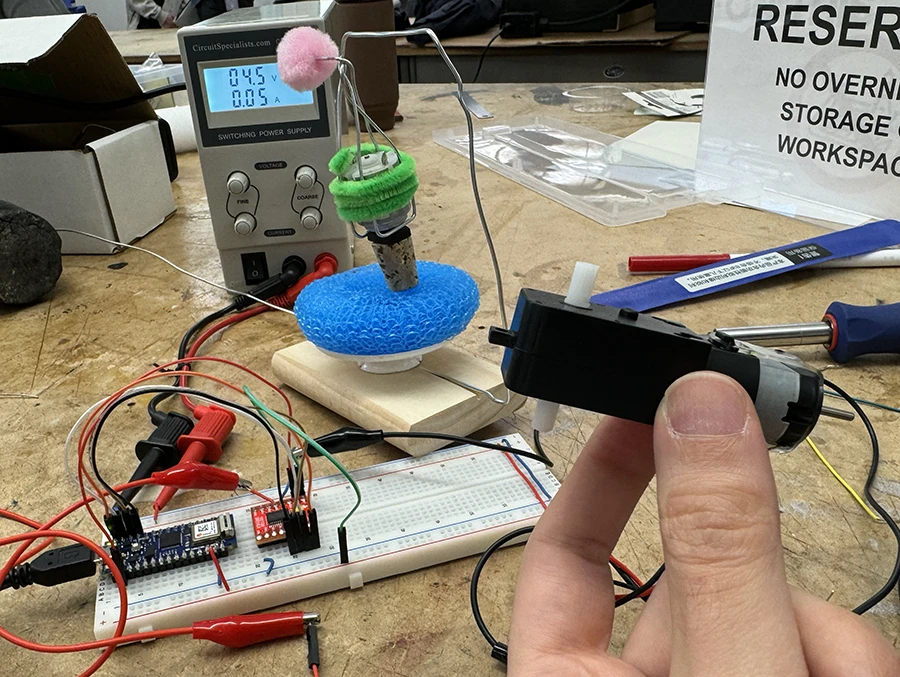

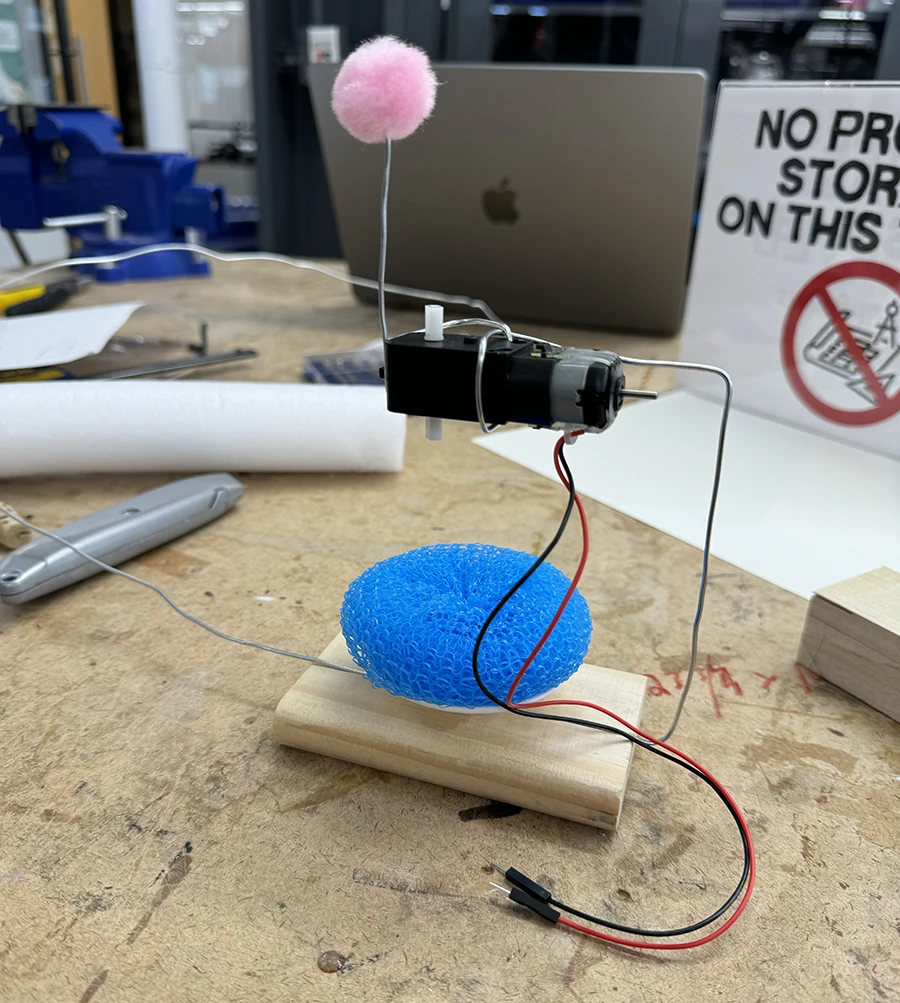

For the second iteration, we swapped the motor for a DC motor with a gearbox, which increased its torque.

This new iteration worked much better! However, due to the friction between the gears, the motor also made a much louder noise that obscured the sound we wanted to make.

So we made a recording and used a 30-band equalizer to amplify the frequencies of the sponge and attenuate those of the gearbox, bringing the result closer to the sound we wanted.
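For anyone curious about what a single slider of such an equalizer does, here is a minimal Python sketch; it is not the tool we actually used. It assumes each band is a peaking ("bell") biquad filter built from the RBJ Audio EQ Cookbook formulas, with a Q of about 4.32, which corresponds to the 1/3-octave bands common in 30- and 31-band graphic equalizers. The function names are hypothetical.

```python
import math


def peaking_eq(f0, gain_db, q, fs):
    """Coefficients for one peaking (bell) EQ band, per the RBJ Audio EQ
    Cookbook. Returns (b, a), normalized so that a[0] == 1."""
    A = 10 ** (gain_db / 40)          # amplitude factor; the gain at f0 is A**2
    w0 = 2 * math.pi * f0 / fs        # center frequency in radians per sample
    alpha = math.sin(w0) / (2 * q)    # sets the width of the bell
    cos_w0 = math.cos(w0)
    b = [1 + alpha * A, -2 * cos_w0, 1 - alpha * A]
    a = [1 + alpha / A, -2 * cos_w0, 1 - alpha / A]
    a0 = a[0]
    return [c / a0 for c in b], [c / a0 for c in a]


def apply_biquad(samples, b, a):
    """Filter samples with a Direct Form I biquad (assumes a[0] == 1)."""
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x0 in samples:
        y0 = b[0] * x0 + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1 = x1, x0   # shift input history
        y2, y1 = y1, y0   # shift output history
        out.append(y0)
    return out


def equalize(samples, bands, fs, q=4.32):
    """Apply one peaking filter per (center_hz, gain_db) band, in series.
    Positive gains boost a band; negative gains cut it."""
    out = list(samples)
    for f0, gain_db in bands:
        b, a = peaking_eq(f0, gain_db, q, fs)
        out = apply_biquad(out, b, a)
    return out
```

For example, `equalize(samples, [(3150, 6.0), (200, -12.0)], 44100)` would boost a band around 3150 Hz by 6 dB and cut one around 200 Hz by 12 dB. Those frequencies are placeholders, not values measured from the sponge or the gearbox. Running the bands in series mirrors how raising one slider and lowering another on a graphic equalizer stacks their effects.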

Week 01

For the first week, our assignment was to record a series of sounds representing different concepts. Together with Jinnie Shim and Ray Wang, we went on a scavenger hunt and recorded the following audio clips.

We also got started on our project for next week, which is documented in the Week 02 blog post.