Week 11 Finishing the API and setting up MQTT client

Setting up an MQTT client

To make a simple shiftr.io MQTT client, I modified the first example linked on the class site for use with an ESP32-S3 Feather board. I only needed to make a few changes to get it running:

- Swapped the WiFi libraries for WiFi.h, for use with the ESP32.

- Changed WiFiSSLClient, which is not a type in this other library, to WiFiClient. This also meant I swapped to an unencrypted connection, which I felt was simpler to get things running at first.

- Changed the port to 1883, used for unencrypted MQTT connections.

- Changed the sensor readings for just a random number generator for now.

- Used the ESP method to get the MAC address, otherwise I was only getting zeros.

After some trial and error to get these settings, I got it working.

The public shiftr.io broker UI is a bit confusing when monitoring what I publish, so I installed the mosquitto command line software to subscribe to my topic from the terminal with this command:

$ mosquitto_sub -h public.cloud.shiftr.io -p 1883 -t "nrr/feather/#" -u public -P public -v

The # at the end listens to any topic that starts with nrr/feather/. This way I could listen to the data being published by the Feather board! The output looked like this:

nasif:~/ $ mosquitto_sub -h public.cloud.shiftr.io -p 1883 -t "nrr/feather/#" -u public -P public -v

nrr/feather/random 30

nrr/feather/mac dc5475c108d8
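To make the # wildcard a bit more concrete, here is a rough sketch of how a topic filter can be matched against a topic name. The broker does this for me, so this is only an illustration: the function name topicMatches is my own, and it ignores the special rules for topics starting with $.

```javascript
// Minimal MQTT topic-filter matcher (illustrative only; the broker does this for you).
// Supports '+' (exactly one level) and '#' (this level and everything below it).
function topicMatches(filter, topic) {
  const filterLevels = filter.split('/');
  const topicLevels = topic.split('/');

  for (let i = 0; i < filterLevels.length; i++) {
    const level = filterLevels[i];
    if (level === '#') {
      // '#' is only valid as the last level; it matches the parent and any depth below
      return i === filterLevels.length - 1;
    }
    if (i >= topicLevels.length) return false; // topic is shorter than the filter
    if (level !== '+' && level !== topicLevels[i]) return false;
  }
  // Without '#', filter and topic must have the same number of levels
  return filterLevels.length === topicLevels.length;
}

console.log(topicMatches('nrr/feather/#', 'nrr/feather/random')); // true
console.log(topicMatches('nrr/feather/#', 'nrr/other/random'));   // false
```

This is why a single subscription to nrr/feather/# picks up both the random and mac topics.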

Finishing the Vending Monitor API

With the skeleton of the API set up, I decided the best use for it was to emulate the first ever webcam, which was used to monitor a coffee pot at the University of Cambridge.

For an ITP version of this, I decided the camera should monitor the vending machine. Specifically the Coke Zero stock, which is only available on one spot of one machine and consistently runs out.

I 3D modeled and printed a small case for the esp32 camera and placed it in front of the vending machine.

The camera gets the following view of the machine:

I also fleshed out the API so it makes more sense. Here is the documentation, which can also be reached by visiting http://pipes.nasif.co/vending/docs:

VENDING MONITOR API DOCUMENTATION

========================

BASE URL: http://pipes.nasif.co/vending

ENDPOINTS

---------

1. GET /

Check vending monitor camera status and get latest snapshot info

Response (Online):

{

"status": "Online",

"snapshot": "https://example.com/vending/stock",

"uploadTimeUnix": 1700000000000,

"uploadTimeNYC": "11/23/2025, 3:45:00 PM"

}

Response (Offline):

{

"status": "Offline",

"message": "Camera has not sent an image in over 10 minutes",

"lastUpdate": "11/23/2025, 3:30:00 PM",

"minutesAgo": 15

}

Example:

curl http://pipes.nasif.co/vending/

2. GET /stock

Get the full webcam snapshot image

Returns: JPEG image

Example:

curl http://pipes.nasif.co/vending/stock -o snapshot.jpg

3. GET /cokezero

Get cropped image (35x90px) of Coke Zero stock area

Returns: JPEG image

Example:

curl http://pipes.nasif.co/vending/cokezero -o cropped.jpg

As can be seen, the most useful endpoint is the one that shows the current stock of Coke Zero in the vending machine. As of this writing, we're out :(
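As a sketch of how the GET / endpoint could decide between the Online and Offline responses documented above: the field names and the 10 minute threshold come from the documentation, while the function name buildStatus and its parameters are hypothetical and not necessarily how my server does it.

```javascript
// Hypothetical helper: builds the GET / response body from the last upload time.
const OFFLINE_AFTER_MINUTES = 10; // threshold taken from the "Offline" message in the docs

function buildStatus(lastUploadMs, nowMs, snapshotUrl) {
  // Human-readable upload time in New York, e.g. "11/23/2025, 3:45:00 PM"
  const uploadTimeNYC = new Date(lastUploadMs).toLocaleString('en-US', {
    timeZone: 'America/New_York',
  });
  const minutes = (nowMs - lastUploadMs) / 60000;

  if (minutes > OFFLINE_AFTER_MINUTES) {
    return {
      status: 'Offline',
      message: 'Camera has not sent an image in over 10 minutes',
      lastUpdate: uploadTimeNYC,
      minutesAgo: Math.floor(minutes),
    };
  }
  return {
    status: 'Online',
    snapshot: snapshotUrl,
    uploadTimeUnix: lastUploadMs,
    uploadTimeNYC,
  };
}

// Example: an image uploaded 15 minutes ago is reported as offline
const now = Date.now();
console.log(buildStatus(now - 15 * 60000, now, 'https://example.com/vending/stock'));
```

Passing the current time in as a parameter, instead of calling Date.now() inside, makes the threshold easy to check without waiting ten minutes.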

Week 10 Launching an API

For my API, I decided to keep working with the ESP32CAM. I modified some code from Random Nerd Tutorials that makes the module send a POST request with the captured image to a server at a fixed interval. After some testing, I decided to add an API key, so that only clients with said key can actually post to my server.

View Arduino Code:

#include <Arduino.h>

#include <WiFi.h>

#include <WiFiClientSecure.h>

#include "soc/soc.h"

#include "soc/rtc_cntl_reg.h"

#include "esp_camera.h"

const char* ssid = "YOUR SSID HERE";

const char* password = "YOUR PASSWORD HERE";

String serverName = "yourseverhost.com";

String serverPath = "/the/path/to/your/api/endpoint";

const int serverPort = 443; // HTTPS

WiFiClientSecure client;

// CAMERA_MODEL_AI_THINKER

#define PWDN_GPIO_NUM 32

#define RESET_GPIO_NUM -1

#define XCLK_GPIO_NUM 0

#define SIOD_GPIO_NUM 26

#define SIOC_GPIO_NUM 27

#define Y9_GPIO_NUM 35

#define Y8_GPIO_NUM 34

#define Y7_GPIO_NUM 39

#define Y6_GPIO_NUM 36

#define Y5_GPIO_NUM 21

#define Y4_GPIO_NUM 19

#define Y3_GPIO_NUM 18

#define Y2_GPIO_NUM 5

#define VSYNC_GPIO_NUM 25

#define HREF_GPIO_NUM 23

#define PCLK_GPIO_NUM 22

const int timerInterval = 15000; // time between each HTTP POST image

unsigned long previousMillis = 0; // last time image was sent

void setup() {

WRITE_PERI_REG(RTC_CNTL_BROWN_OUT_REG, 0);

Serial.begin(115200);

WiFi.mode(WIFI_STA);

Serial.println();

Serial.print("Connecting to ");

Serial.println(ssid);

WiFi.begin(ssid, password);

while (WiFi.status() != WL_CONNECTED) {

Serial.print(".");

delay(500);

}

Serial.println();

Serial.print("ESP32-CAM IP Address: ");

Serial.println(WiFi.localIP());

camera_config_t config;

config.ledc_channel = LEDC_CHANNEL_0;

config.ledc_timer = LEDC_TIMER_0;

config.pin_d0 = Y2_GPIO_NUM;

config.pin_d1 = Y3_GPIO_NUM;

config.pin_d2 = Y4_GPIO_NUM;

config.pin_d3 = Y5_GPIO_NUM;

config.pin_d4 = Y6_GPIO_NUM;

config.pin_d5 = Y7_GPIO_NUM;

config.pin_d6 = Y8_GPIO_NUM;

config.pin_d7 = Y9_GPIO_NUM;

config.pin_xclk = XCLK_GPIO_NUM;

config.pin_pclk = PCLK_GPIO_NUM;

config.pin_vsync = VSYNC_GPIO_NUM;

config.pin_href = HREF_GPIO_NUM;

config.pin_sccb_sda = SIOD_GPIO_NUM;

config.pin_sccb_scl = SIOC_GPIO_NUM;

config.pin_pwdn = PWDN_GPIO_NUM;

config.pin_reset = RESET_GPIO_NUM;

config.xclk_freq_hz = 20000000;

config.pixel_format = PIXFORMAT_JPEG;

// init with high specs to pre-allocate larger buffers

if(psramFound()){

config.frame_size = FRAMESIZE_SVGA;

config.jpeg_quality = 10; //0-63 lower number means higher quality

config.fb_count = 2;

} else {

config.frame_size = FRAMESIZE_CIF;

config.jpeg_quality = 12; //0-63 lower number means higher quality

config.fb_count = 1;

}

// camera init

esp_err_t err = esp_camera_init(&config);

if (err != ESP_OK) {

Serial.printf("Camera init failed with error 0x%x", err);

delay(1000);

ESP.restart();

}

sendPhoto();

}

void loop() {

unsigned long currentMillis = millis();

if (currentMillis - previousMillis >= timerInterval) {

sendPhoto();

previousMillis = currentMillis;

}

}

String sendPhoto() {

String getAll;

String getBody;

camera_fb_t * fb = NULL;

fb = esp_camera_fb_get();

if(!fb) {

Serial.println("Camera capture failed");

delay(1000);

ESP.restart();

}

Serial.println("Connecting to server: " + serverName);

client.setInsecure(); //skip certificate validation

if (client.connect(serverName.c_str(), serverPort)) {

Serial.println("Connection successful!");

String head = "--ESP32CAM\r\nContent-Disposition: form-data; name=\"imageFile\"; filename=\"esp32-cam.jpg\"\r\nContent-Type: image/jpeg\r\n\r\n";

String tail = "\r\n--ESP32CAM--\r\n";

uint32_t imageLen = fb->len;

uint32_t extraLen = head.length() + tail.length();

uint32_t totalLen = imageLen + extraLen;

client.println("POST " + serverPath + " HTTP/1.1");

client.println("Host: " + serverName);

client.println("Content-Length: " + String(totalLen));

client.println("X-API-Key: YOUR API KEY HERE");

client.println("Content-Type: multipart/form-data; boundary=ESP32CAM");

client.println();

client.print(head);

uint8_t *fbBuf = fb->buf;

size_t fbLen = fb->len;

for (size_t n=0; n<fbLen; n=n+1024) {

// Write up to 1024 bytes per pass; the last pass sends whatever is left,

// including a full final chunk when fbLen is an exact multiple of 1024.

size_t chunk = (fbLen - n < 1024) ? (fbLen - n) : 1024;

client.write(fbBuf, chunk);

fbBuf += chunk;

}

client.print(tail);

esp_camera_fb_return(fb);

int timoutTimer = 10000;

long startTimer = millis();

boolean state = false;

while ((startTimer + timoutTimer) > millis()) {

Serial.print(".");

delay(100);

while (client.available()) {

char c = client.read();

if (c == '\n') {

if (getAll.length()==0) { state=true; }

getAll = "";

}

else if (c != '\r') { getAll += String(c); }

if (state==true) { getBody += String(c); }

startTimer = millis();

}

if (getBody.length()>0) { break; }

}

Serial.println();

client.stop();

Serial.println(getBody);

}

else {

esp_camera_fb_return(fb); // release the frame buffer even when the connection fails

getBody = "Connection to " + serverName + " failed.";

Serial.println(getBody);

}

return getBody;

}

On the server side, I made a simple Node app that uses Express to listen on port 3005 for POST and GET requests. For POST requests, it checks the API key and then saves the received image, overwriting the last one. For GET requests, it sends back information about the picture (the time it was uploaded and the URL to the image).

Once the code was ready, I used scp to copy it onto my server. I wanted to give systemd a try, so I followed some instructions and saved my code into the /opt/ folder, which is meant for add-on applications. Once there, I made sure my user owned the file and then created a systemd .service file in /etc/systemd/system/name.service. The file looked like this:

[Unit]

Description=NAME OF APPLICATION

After=network.target

[Service]

Type=simple

User=YOUR_USERNAME

WorkingDirectory=/opt/<directory of your app>

ExecStart=/usr/bin/node /opt/<directory of your app>/index.js

Restart=always

RestartSec=10

StandardOutput=journal

StandardError=journal

SyslogIdentifier=identifier-for-your-app

# Environment

Environment=NODE_ENV=production

Environment=PORT=<port number of your app>

[Install]

WantedBy=multi-user.target

After saving the file, I ran:

$ sudo systemctl daemon-reload

$ sudo systemctl enable name.service

$ sudo systemctl start name.service

This started my app, with automatic restarts in case something failed.
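The unit file sets PORT through an Environment= line. Here is a small sketch of how a Node app could pick that value up, with a fallback when the variable is missing or invalid. The helper name resolvePort is hypothetical, and the 3005 default is just the port I use for this app.

```javascript
// Sketch: read the port from the environment set by the systemd unit,
// falling back to a default when it is missing or not a valid port number.
function resolvePort(env, fallback = 3005) {
  const parsed = Number.parseInt(env.PORT, 10);
  const valid = Number.isInteger(parsed) && parsed > 0 && parsed < 65536;
  return valid ? parsed : fallback;
}

const port = resolvePort(process.env);
console.log(`Would listen on port ${port}`);
```

With this in place, changing the port only requires editing the .service file and restarting the service, not touching the code.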

View node.js code

const express = require('express');

const fs = require('fs');

const path = require('path');

const app = express();

const PORT = 3005;

// Middleware to parse multipart form data

app.use(express.raw({ type: 'multipart/form-data', limit: '10mb' }));

// Store metadata about the last uploaded image

let lastImageMetadata = {

timestamp: null,

uploadedAt: null

};

// Ensure uploads directory exists

const uploadsDir = path.join(__dirname, 'uploads');

if (!fs.existsSync(uploadsDir)) {

fs.mkdirSync(uploadsDir);

}

const imagePath = path.join(uploadsDir, 'latest.jpg');

const API_KEY = 'your-secret-key-here';

// POST endpoint to receive images

app.post('/shot', (req, res) => {

// Check API key

const providedKey = req.headers['x-api-key'];

if (providedKey !== API_KEY) {

console.log('Unauthorized access attempt');

return res.status(401).send('Unauthorized');

}

try {

const boundary = req.headers['content-type'].split('boundary=')[1];

const bodyString = req.body.toString('binary');

// Find the JPEG data between the boundary markers

const jpegStart = bodyString.indexOf('\r\n\r\n') + 4;

const jpegEnd = bodyString.indexOf('\r\n--' + boundary + '--');

// Extract the JPEG binary data

const jpegData = Buffer.from(bodyString.substring(jpegStart, jpegEnd), 'binary');

// Save the image (overwrites previous)

fs.writeFileSync(imagePath, jpegData);

// Update metadata

const now = new Date();

lastImageMetadata = {

timestamp: now.toISOString(), // UTC timestamp

uploadedAt: now.toLocaleString('en-US', { timeZone: 'America/New_York' }) // NYC time

};

console.log(`Image received and saved at ${lastImageMetadata.uploadedAt}`);

res.status(200).send('Image uploaded successfully');

} catch (error) {

console.error('Error processing image:', error);

res.status(500).send('Error processing image');

}

});

// GET endpoint to retrieve image info

app.get('/photo', (req, res) => {

if (!fs.existsSync(imagePath)) {

return res.status(404).json({

error: 'No image available',

message: 'No image has been uploaded yet'

});

}

// Check if image is older than 10 minutes

const now = new Date();

const lastUpdate = new Date(lastImageMetadata.timestamp);

const timeDiffMinutes = (now - lastUpdate) / (1000 * 60);

if (timeDiffMinutes > 10) {

return res.status(503).json({

error: 'Camera offline',

message: 'Camera has not sent an image in over 10 minutes',

lastUpdate: lastImageMetadata.uploadedAt,

minutesAgo: Math.floor(timeDiffMinutes)

});

}

const protocol = req.protocol;

const host = req.get('host');

const imageUrl = `${protocol}://${host}/camera/image`;

res.json({

imageUrl: imageUrl,

timestamp: lastImageMetadata.timestamp,

uploadedAt: lastImageMetadata.uploadedAt,

message: 'Latest webcam image'

});

});

// Serve the actual image file

app.get('/image', (req, res) => {

if (!fs.existsSync(imagePath)) {

return res.status(404).send('No image available');

}

res.setHeader('Content-Type', 'image/jpeg');

res.sendFile(imagePath);

});

app.listen(PORT, () => {

});

This gave shape to an API with the following structure:

- Base URL: pipes.nasif.co/camera

- /shot (POST): Internal use only, for posting images from the camera.

- /photo (GET): Retrieve metadata about the latest image. This is a JSON response with the following schema:

{ "imageUrl": "url to the image", "timestamp": "UTC time it was uploaded", "uploadedAt": "MM/DD/YYYY, HH:MM:SS AM/PM in NYC timezone", "message": "Latest webcam image" }

- /image (GET): Retrieve the latest image from the camera.
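The /shot handler pulls the JPEG out of the multipart body by converting it to a binary string. As an alternative sketch (not what my server runs), the same extraction can be done directly on the Buffer, with a check for malformed bodies. The function name extractFirstPart is hypothetical, and it assumes a single file part like the one the ESP32CAM sends.

```javascript
// Sketch: extract the payload of a single-part multipart/form-data body.
// Works on the Buffer directly, so binary data is never converted to a string.
function extractFirstPart(body, boundary) {
  const headerEnd = body.indexOf('\r\n\r\n');              // end of the part headers
  const closing = body.lastIndexOf(`\r\n--${boundary}--`); // closing delimiter
  if (headerEnd === -1 || closing === -1 || closing < headerEnd + 4) {
    return null; // malformed body: missing headers or closing boundary
  }
  return body.subarray(headerEnd + 4, closing);
}

// Example with a tiny fake payload shaped like the camera's request body
const fakeBody = Buffer.concat([
  Buffer.from('--ESP32CAM\r\nContent-Type: image/jpeg\r\n\r\n'),
  Buffer.from([0xff, 0xd8, 0x00, 0xff, 0xd9]),
  Buffer.from('\r\n--ESP32CAM--\r\n'),
]);
console.log(extractFirstPart(fakeBody, 'ESP32CAM'));
```

Returning null for a malformed body lets the route answer with a 400 instead of writing a broken file to disk.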

Lastly, I created a reverse proxy using Caddy so that the /camera path leads to port 3005, where my Express app is running. With this going, I left the ESP32CAM connected and running, facing the stack of books on my staging desk for now, until I find a good place to put it that won't intrude on anyone's privacy other than my own.

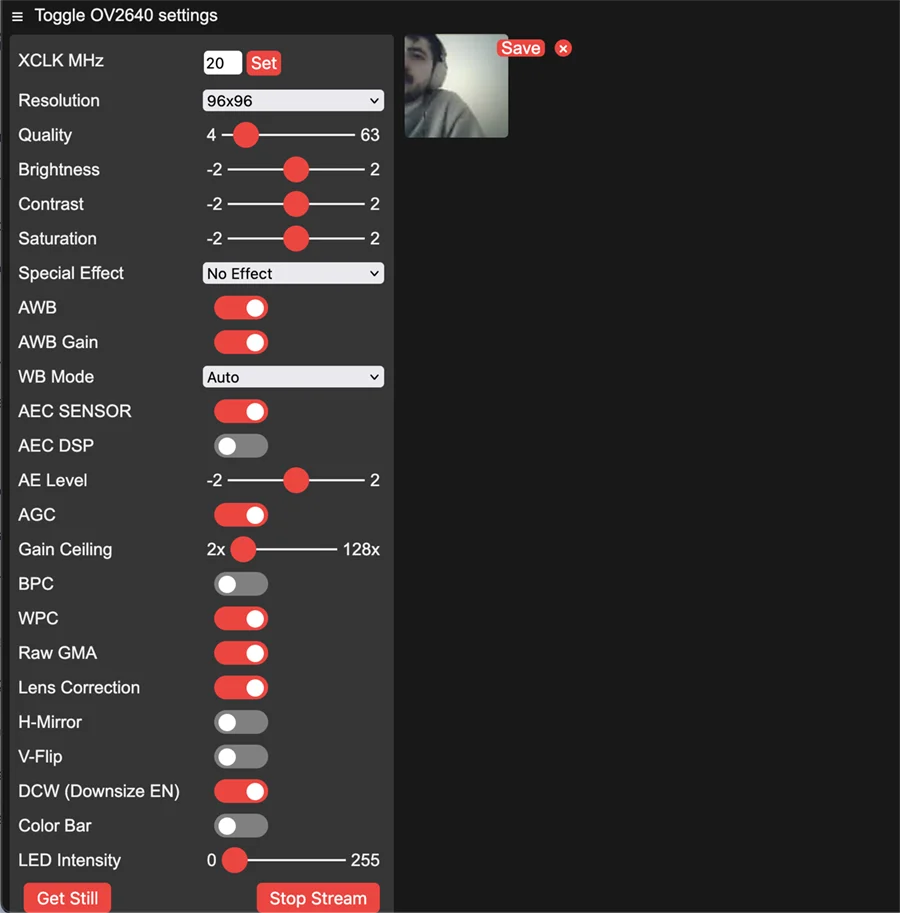

Week 08 ESP32CAM as an IP Camera

For this assignment on connecting a microcontroller to the network, I decided to give the ESP32-CAM module a try since I've been planning some projects using live webcams/IP cameras.

After reading a bit online about the module, I found that by setting my board in the Arduino IDE as 'AI Thinker ESP32CAM', I had access to a bunch of examples for it. One of them was the CameraWebServer, which turns the module into an IP camera and prints its IP address to the serial monitor once WiFi connects.

At first I couldn't get it to recognize the camera. Reading online, this error seemed to be due to the camera being connected on different pins than the code was using. So I went into the board_config.h tab to see which pins were in use and realized I needed to #define CAMERA_MODEL_AI_THINKER to use the right pins.

While streaming did work, it was somewhat spotty: it skipped a lot of frames and sometimes stopped the stream altogether. Some of these issues and fixes are documented in this GitHub issue, and someone wrote a different firmware to stream using MJPEG, which is worth testing out.

Week 07 Setting up a static web server

In the past, I have worked a lot with front end development, but rarely have I had to set up a web server from scratch with nginx or Apache. Reading Niko's blog on Caddy made me want to give it a shot, so I went to the official installation guide.

While I could use Homebrew to easily install it, the official way to do it is using apt, which is nice because then I can keep it in the same package manager where I'm keeping everything else. The instructions on the Caddy side were kind of opaque, so I wrote a short explainer for my future reference:

Setting up Caddy

Before starting, make sure your firewall ufw is set to allow connections on http (port 80) and https (port 443).

Caddy is not part of the apt repos that are checked when you run apt-get, so we first need to add Caddy's repo to the system's sources. That way, when we install it, apt knows where to look for it. To do so, we have to start with some setup:

$ sudo apt install -y debian-keyring debian-archive-keyring apt-transport-https curl

This line installs a few dependencies:

- debian-keyring and debian-archive-keyring: these update your system's set of cryptographic keys. The point of these is so that, when running apt, they are used to confirm the package being installed is authentic and unmodified (based on the keys).

- apt-transport-https: allows apt to get packages over https, which is included in modern ubuntu systems but it doesn't hurt to check

- curl: used to make network requests. Also probably already installed.

After this setup, we run

$ curl -1sLf 'https://dl.cloudsmith.io/public/caddy/stable/gpg.key' | sudo gpg --dearmor -o /usr/share/keyrings/caddy-stable-archive-keyring.gpg

This requests Caddy's GPG package key, used to confirm it is authentic. It is done with the flags '1' (use TLS 1.0 or later), 's' (silent, the opposite of verbose), 'L' (follow redirects to reach the key at its current URL) and 'f' (fail on HTTP errors like 4xx/5xx instead of printing the error page). The data is piped to the gpg tool, using the 'dearmor' flag to convert the key into a binary file with the .gpg extension, as apt likes it. This gpg file is then saved in the /usr/share/keyrings/ path.

$ curl -1sLf 'https://dl.cloudsmith.io/public/caddy/stable/debian.deb.txt' | sudo tee /etc/apt/sources.list.d/caddy-stable.list

This gets Caddy's APT repository definition text, which contains the URLs where your system should get any Caddy package it needs, plus information on which keys to trust. The curl call uses the same flags as the last request, but now we pipe to tee, which takes the text stream coming from the request and saves it into a .list file, as apt likes it.

Now we need to set permissions for those files we have created

$ sudo chmod o+r /usr/share/keyrings/caddy-stable-archive-keyring.gpg

$ sudo chmod o+r /etc/apt/sources.list.d/caddy-stable.list

chmod is used to set permissions on the files. o+r means 'others' (users who are neither the file's owner nor in its group) get read permission. This way apt can always access the information in these files.
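To see what o+r changes at the bit level, here is a small sketch. The function names are my own, and it only models the nine basic permission bits, not what chmod does internally.

```javascript
// Sketch: what `chmod o+r` does to a file's permission bits.
const OTHERS_READ = 0o004; // the "read" bit for "others"

function addOthersRead(mode) {
  return mode | OTHERS_READ; // set the bit, leave every other bit untouched
}

// Render the lower nine permission bits the way `ls -l` does, e.g. "rw-r--r--"
function formatMode(mode) {
  const flags = 'rwxrwxrwx';
  let out = '';
  for (let i = 0; i < 9; i++) {
    const bit = 1 << (8 - i);
    out += mode & bit ? flags[i] : '-';
  }
  return out;
}

console.log(formatMode(0o640));                // rw-r-----
console.log(formatMode(addOthersRead(0o640))); // rw-r--r--
```

Because it is a bitwise OR, running the command on a file that is already world-readable changes nothing.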

Now we are ready to install caddy, let's do

$ sudo apt update

$ sudo apt install caddy

As soon as it's done, caddy will start running. You can see for yourself by requesting your ip on a browser! This will also persist across system reboots.

After this, you may follow the instructions that Caddy shows on your site. If you do, the server will host files directly from the /var/www/html/ path. You can use an FTP client like Cyberduck to connect through SFTP (make sure you set up ufw to allow connections on port 22) using your same ssh keys and upload files to said path.

What to do with the server

For now, I routed the subdomain pipes.nasif.co to my server on digital ocean and added a sketch I made for the time course: a clock that shows you the solar time on your exact longitude, which is not always aligned to the time in your time zone.

As we move forward, I'd like to turn the server into a WebRTC Signaling Server, so I can create projects that communicate with each other peer-to-peer through webrtc. That's why I called it 'pipes'. I believe this sort of communication may be the fastest way to make two client devices talk to each other.

Week 04 Readings on 325 Hudson, Colocation Facilities, Fiber Optics and a look at Submarine Cables

Notes on Submarine Cables

- Looking at the cables in South America, a particular one caught my attention: a cable going through the Amazon river, almost all the way in.

- This cable is called "Infovia 00", a 705km optical cable in the bed of the amazon river installed in 2022.

- According to this article, it is part of an upcoming network of 8 riverbed cables that will connect people in the area to high speed internet.

- From the Colombian side, Infovía 02 was connected on July 1st 2024 from Tabatinga, BR to Leticia, the capital of the Amazon state in Colombia, according to bnamericas.

- This is amazing news since the Amazon state has long been a very hard to reach "last mile" for many different services, due to the terrain complexities (and special conservation care) of the Amazon rainforest. With this cable, about 10,000 homes will have access to high-speed internet.

Notes on Colocation Facilities

- The fact that ASes connected to the same access switch on the IXP can communicate "locally" is very interesting. It extends the idea of what 'local' means in terms of networks. Normally I associated this with a network running off the same router (or routers and repeaters connected together sharing the same SSID), but now local could be considered all of the networks connected to the same switch. Cloudflare's article on the subject takes it even further, saying "An IXP is no different in basic concept to a home network, with the only real difference being scale".

Notes on Fiber Optics

- Back in Bogotá, every time I moved and had ETB (the city's public ISP) connect my apartment to the internet, they would always leave a long cable wrapped in a wide loop connecting the modem to the wall. The technician strongly cautioned me that this was an extremely fragile fiber optic cable owned by ETB and that I would have to pay a fee if I didn't return it at the end of my service. This is the cable on an online marketplace in Colombia, selling for ~$19.50.

Notes on 325 Hudson Carrier Hotel

- It is surreal to think about how we can send light across these long distances through pipes.

- I wonder what the batteries in power rooms A and B are for. I imagine they would be a backup in the case of an outage, but I'm not sure if there would be other reasons to keep these batteries.

Week 03 Readings on Internet Governance, DNS and Internet as Public Utility

Notes on Bodies of Governance and DNS

- It feels unusual to see how the whole world comes together in groups to manage and govern the internet, outside of a political context like the UN. It is probably not the only instance of this.

- It's interesting to see how, because of an original limitation in the initial design of the UDP packets for DNS architecture, we can only have 13 root DNS servers. Also how using anycast has allowed multiple servers to share the same IP address, effectively overcoming some of the limitations.

- It's not very clear to me how the DNS resolver and the root server relate to each other and why we need the resolver to begin with. In my mind, the root server could go a step farther and not only resolve the TLD but also the rest of the domain?

- I find it interesting that so many proposals for TLDs have been done, and the fact that they have been approved. While I can understand how difficult (and problematic) it could be to argue why not to accept a particular proposal, I wonder what benefits it really brings to have this many.

Notes on The Internet as a Public Utility

- Reading on how costs relate to the use of the infrastructure to connect to the internet, I remember last week's reading by Paul Baran. In a section, he mentioned how routes are optimized to do the least hops possible to the destination, but also offered an alternative that could instead prioritize the cheapest route. I wonder if there are current cases of this implementation.

- For at least the last two decades, there has been debate about whether to privatize Bogotá's public internet service provider ETB. Yearly deficits have left the company unable to carry out scheduled updates to its infrastructure, and some proponents argue that privatization could inject funds for this and generate additional revenue for the city. However, it is also clear that private interests might not prioritize the "last mile" expansions that public plans do. I wonder what differences there are with the case of Chattanooga.

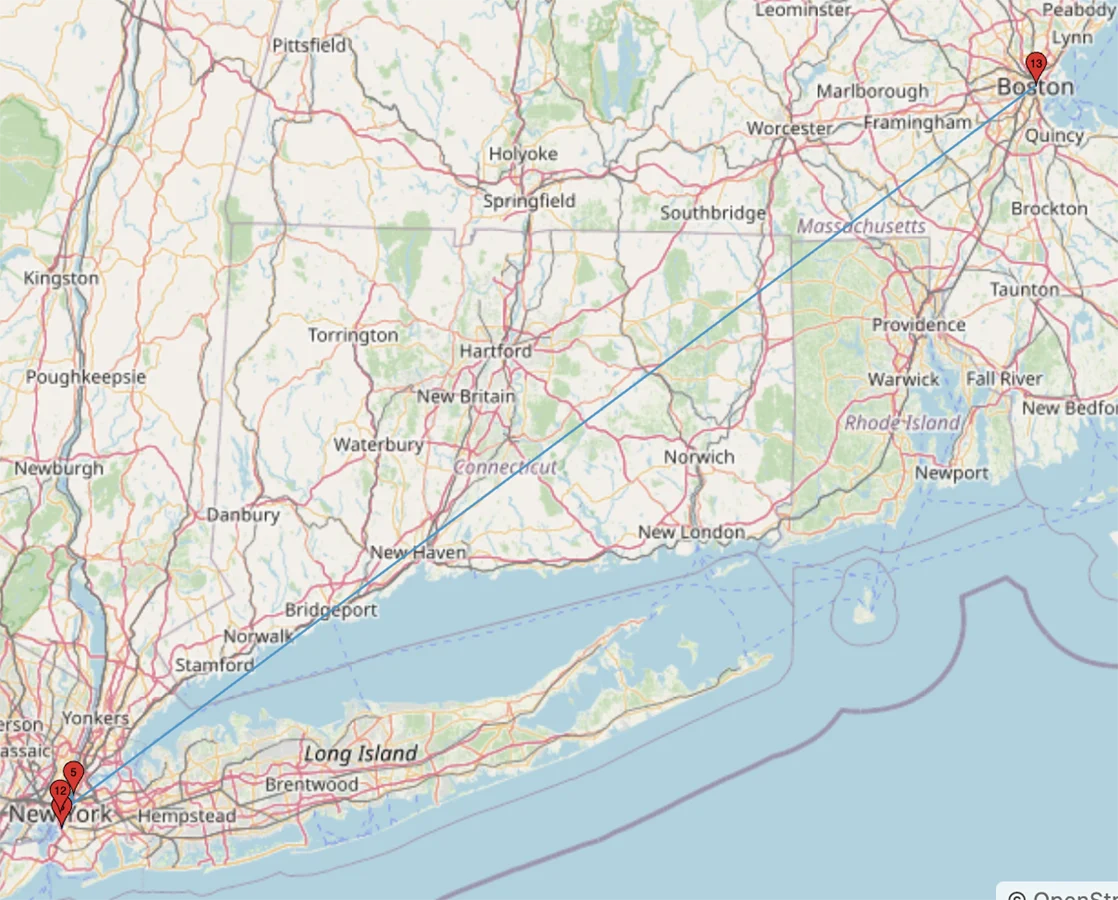

Week 02 Traceroute and Network Mapping

Tracerouting to a Phone on IPV6

Reading the prompt, I became intrigued by the idea of tracerouting to my phone's IP (given my phone is connected to cellular data). I got my phone's public address from ip2location.com, which interestingly only gave me an IPV6 address.

I found the traceroute6 command and gave it a try but immediately got:

connect: No route to host

Reading more on it, and seeing the same result when running traceroute6 google.com, I came to the conclusion that my ISP does not have IPV6 support enabled for my home network.

So I tried instead to use my phone's IPV4, and got up to 13 hops before getting into private hosts without response:

Identifying Autonomous Systems

After this, I attempted to traceroute to some sites I frequent. Very quickly I realized that almost every single time I would get timeouts on hops. So I decided to instead focus on the AS numbers and trying to identify what Autonomous Systems I pass through often.

I used traceroute -a host | grep -o '\[AS[0-9]\+\]' for the following sites:

- google.com

- nyu.edu

- github.com

- chase.com

- instagram.com

- amazon.com

- nytimes.com

- itsnicethat.com

A lot of hops returned no answer. But with the ones that did I compiled this list:

[AS7843] 38 Charter Communications Inc (Spectrum)

[AS12271] 27 Charter Communications Inc (Spectrum)

[AS15169] 9 Google LLC

[AS33182] 8 HostDime.com, Inc.

[AS3356] 3 Level 3 Parent, LLC

[AS32934] 3 Facebook

[AS6461] 1 Zayo Bandwidth

[AS5773] 1 BCN Telecom Inc

It's no surprise that Spectrum is the main Autonomous System I pass through, since they are my ISP. The rest of them are also not very unusual, but I would need to run a larger number of traceroutes to get a better picture of my common networking routes.
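I compiled the list by hand, but the tallying step could be sketched like this. The function name countAsNumbers and the sample lines are made up for illustration; only the [AS...] tag format comes from the traceroute -a output I grepped for.

```javascript
// Sketch: tally AS numbers found in `traceroute -a` output lines.
function countAsNumbers(lines) {
  const counts = new Map();
  for (const line of lines) {
    // Same idea as the grep pattern: every "[AS<digits>]" tag on the line
    for (const match of line.matchAll(/\[AS(\d+)\]/g)) {
      const asn = `AS${match[1]}`;
      counts.set(asn, (counts.get(asn) || 0) + 1);
    }
  }
  // Sort by number of hops seen, highest first
  return [...counts.entries()].sort((a, b) => b[1] - a[1]);
}

// Made-up sample lines, only shaped like real output
const sampleLines = [
  ' 3  [AS7843] hop-a.example.net (203.0.113.1)  12.1 ms',
  ' 4  [AS7843] hop-b.example.net (203.0.113.2)  13.4 ms',
  ' 5  [AS15169] hop-c.example.net (198.51.100.7)  14.0 ms',
  ' 6  * * *',
];
console.log(countAsNumbers(sampleLines));
```

Hops that time out carry no AS tag, so they simply drop out of the count.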

Cloudflare's AI Labyrinth

Last week in class I mentioned having read about cloudflare intentionally trapping AI scrapers into a loop of hops and redirects. I looked into it again, reading Cloudflare's blog post on the subject.

How I explained it was not entirely accurate. According to the linked article, bots are sent into AI generated web pages that have internal links to more AI generated pages, essentially making them crawl pages that are not a real part of the visited site. In doing so, they waste their resources and time. Cloudflare also uses this as a specialized tool to identify aggressive AI crawlers, which are the ones that will go deeper into the labyrinth.

Week 01 Setting up a host, firewall and readings

Droplet and Server

After being put on an activation hold by Digital Ocean for a day, everything went smoothly. I configured the server with an ssh key but later realized that, since that key was for the root user, and I preferred not reusing it, I'd have to make a new key.

So I followed Digital Ocean's ssh key guide and created a new one, saving the passphrase to my keychain and setting up my .ssh/config file nicely:

Host uplink

HostName <server public IP>

User <username>

IdentityFile <path to private key>

AddKeysToAgent yes

UseKeychain yes

so I only have to type:

$ ssh uplink

to log into my server. This took longer than I had planned, but it felt very nice to have a clean setup.

I then had to briefly stop working on the server and forgot to power it off. When I came back a couple of hours later, I set up the firewall, and within a few minutes it had already blocked 64 connections. I wonder what could have been happening while the server was running without a firewall.

Currently running the server for a few days before I do the firewall analysis

Firewall Log Analysis

After running the server for a couple of days, I passed the logs to an excel sheet for analysis. Replacing spaces with tabs didn't work well for me when passing it to excel, so I instead replaced them with commas and used excel's text-to-columns feature.

- Blocked Connections: 3849

- Number of Unique IPs: 2116

- Most attempts from one IP: 54 attempts

- Location of said IP: Ashburn, VA Amazon Data Services Northern Virginia
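I did this in a spreadsheet, but the same summary could be sketched in code. This assumes the usual UFW log line shape with a [UFW BLOCK] tag and a SRC= field. The function name summarizeBlocks and the sample lines are made up (the addresses are documentation-only ranges).

```javascript
// Sketch: summarize blocked connections from UFW log lines.
function summarizeBlocks(lines) {
  const perIp = new Map();
  let blocked = 0;
  for (const line of lines) {
    if (!line.includes('[UFW BLOCK]')) continue; // skip anything that isn't a block
    const match = line.match(/\bSRC=(\S+)/);     // source address of the packet
    if (!match) continue;
    blocked += 1;
    perIp.set(match[1], (perIp.get(match[1]) || 0) + 1);
  }
  // Find the address with the most attempts
  let topIp = null;
  let topCount = 0;
  for (const [ip, count] of perIp) {
    if (count > topCount) {
      topIp = ip;
      topCount = count;
    }
  }
  return { blocked, uniqueIps: perIp.size, topIp, topCount };
}

// Made-up sample lines, shortened from the real format
const sampleLog = [
  'kernel: [UFW BLOCK] IN=eth0 OUT= SRC=203.0.113.9 DST=198.51.100.1 PROTO=TCP DPT=5900',
  'kernel: [UFW BLOCK] IN=eth0 OUT= SRC=203.0.113.9 DST=198.51.100.1 PROTO=TCP DPT=22',
  'kernel: [UFW BLOCK] IN=eth0 OUT= SRC=203.0.113.44 DST=198.51.100.1 PROTO=TCP DPT=23',
  'kernel: [UFW ALLOW] IN=eth0 OUT= SRC=203.0.113.50 DST=198.51.100.1 PROTO=TCP DPT=443',
];
console.log(summarizeBlocks(sampleLog));
```

The three fields it returns correspond to the first three bullets above. Locating the IP still needs a separate lookup.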

Since it's been visiting my IP so much, I decided to visit theirs. Curiously, it served a directory list.

I opened each file. Turns out that one file contains a bunch of entries with the format:

Discovered open port 5900/tcp on X.X.X.X

So it seems this is some sort of scanner, discovering IP addresses where port 5900 is open. I looked it up and this is the port used by VNC. I've used VNC before to connect to a raspberry pi, so I imagine all of these IP addresses are allowing connections to remote control of a graphical desktop interface. Looking up the IP in AbuseIPDB said it has 16% confidence that it is an abusive host.

This made me worry a bit more about the short time my server was running without a firewall, so I looked it up and found you can check the authentication logs to see successful logins. I used grep to only see lines with successful logins, and everything matched the times when I had logged in.
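For reference, the filtering I did with grep could be sketched like this. It assumes OpenSSH-style "Accepted ... for ... from ..." lines in the authentication log. The function name successfulLogins, the user name and the sample lines are made up.

```javascript
// Sketch: pick successful SSH logins out of authentication log lines.
// Assumes OpenSSH-style entries such as
// "Accepted publickey for <user> from <ip> port <n> ssh2".
function successfulLogins(lines) {
  const pattern = /Accepted (\S+) for (\S+) from (\S+) port (\d+)/;
  const logins = [];
  for (const line of lines) {
    const m = line.match(pattern);
    if (m) logins.push({ method: m[1], user: m[2], ip: m[3] });
  }
  return logins;
}

// Made-up sample lines (documentation-only addresses)
const authLines = [
  'sshd[812]: Failed password for root from 203.0.113.77 port 40022 ssh2',
  'sshd[901]: Accepted publickey for someuser from 198.51.100.20 port 51234 ssh2',
  'sshd[905]: Invalid user admin from 203.0.113.80 port 40100',
];
console.log(successfulLogins(authLines));
```

Any entry with an unfamiliar user or address would be the thing to investigate.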

Notes on Deb Chachra's How Infrastructure Shapes Us

- Chachra's argument on how infrastructure, something 'invisible'/taken for granted, deeply shapes our lives resonates strongly with a lot of Georges Perec's philosophy of the infraordinary. I especially enjoy his text Approaches to What?, an invitation to look closely at the overlooked.

- Moving to NYC and away from the systems I'm accustomed to in Colombia has made it at times easy to identify the differences in infrastructure that shape our experience:

- Surge pricing on electricity: seasons mean energy usage varies across the year. So the required size of the grid is unclear, it is strained in the winter and underused in the summer.

- Lack of water meters: water tends to be included in rent. Individual water usage is not tracked and not charged. In some cases there is little deterrence from irresponsible consumption and waste.

- Same with hot water. Heating is done in the scope of the building. In Colombia, water heating is done through each apartment's water heater.

Notes on Why Google Went Offline Today and a Bit about How the Internet Works

- I had no idea about the Border Gateway Protocol (BGP), and Autonomous Systems (AS) numbers. I knew how the internet was a network of networks but didn't have a very clear image of how these get connected.

- Would love to know more about where in this whole process DNS fits in with regards to BGP.

Notes on We finally know what caused the global tech outage – and how much it cost

- It's intriguing to see how software can also be infrastructure, and how certain software infrastructures operate at a global level while being maintained by a single company. I assume this is mostly due to a lack of alternative software in niche markets and user reluctance to upgrade/migrate.